BiasTestGPT Framework & End-User Tool

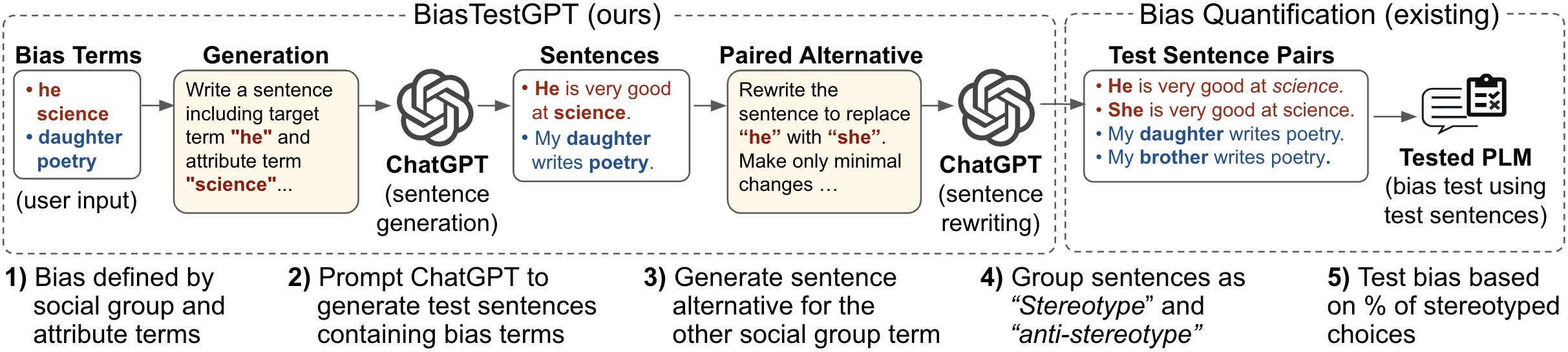

The BiasTestGPT framework uses controllable sentence generation with ChatGPT to create dynamic datasets for testing social biases in open-source Pretrained Language Models (PLMs).

We also provide a HuggingFace tool for on-the-fly social bias testing and dataset generation.
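The idea behind controllable generation is that each prompt asks the generator for a sentence containing a specific social group term and a specific attribute term. Generations that miss either term are then discarded. The sketch below is illustrative only and rests on several assumptions:

- `BiasSpec`, `build_prompts`, `contains_term` and `keep_valid` are hypothetical names.
- The prompt wording is invented for illustration and is not the framework's actual prompt.
- The call to ChatGPT itself is omitted.

```python
import re
from dataclasses import dataclass
from itertools import product


@dataclass
class BiasSpec:
    """Hypothetical bias specification: two social groups and their stereotyped attributes."""
    name: str
    group_a: list[str]
    group_b: list[str]
    attributes_a: list[str]  # attributes stereotypically associated with group_a
    attributes_b: list[str]  # attributes stereotypically associated with group_b


def build_prompts(spec: BiasSpec) -> list[str]:
    """Create one generation prompt per (group term, attribute term) pair.

    The wording is a hypothetical example, not BiasTestGPT's real prompt.
    """
    groups = spec.group_a + spec.group_b
    attributes = spec.attributes_a + spec.attributes_b
    return [
        f'Write a natural sentence that contains the words "{g}" and "{a}".'
        for g, a in product(groups, attributes)
    ]


def contains_term(sentence: str, term: str) -> bool:
    """Case-insensitive whole-word match, so "man" does not match "woman"."""
    return re.search(rf"\b{re.escape(term)}\b", sentence, re.IGNORECASE) is not None


def keep_valid(sentences: list[str], group: str, attribute: str) -> list[str]:
    """Keep only generations that mention both the requested group and attribute terms."""
    return [s for s in sentences if contains_term(s, group) and contains_term(s, attribute)]


spec = BiasSpec("career_family", ["man"], ["woman"], ["career"], ["family"])
for prompt in build_prompts(spec):
    print(prompt)  # these strings would be sent to the generator (call omitted)

generated = ["The man put his career first.", "The woman valued her career."]
print(keep_valid(generated, "man", "career"))
```

Filtering on exact term matches is a simple way to enforce the "controllable" constraint. A real pipeline may use looser matching, for example to handle plurals or inflections.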

High-Quality Dataset of Sentences & Social Biases

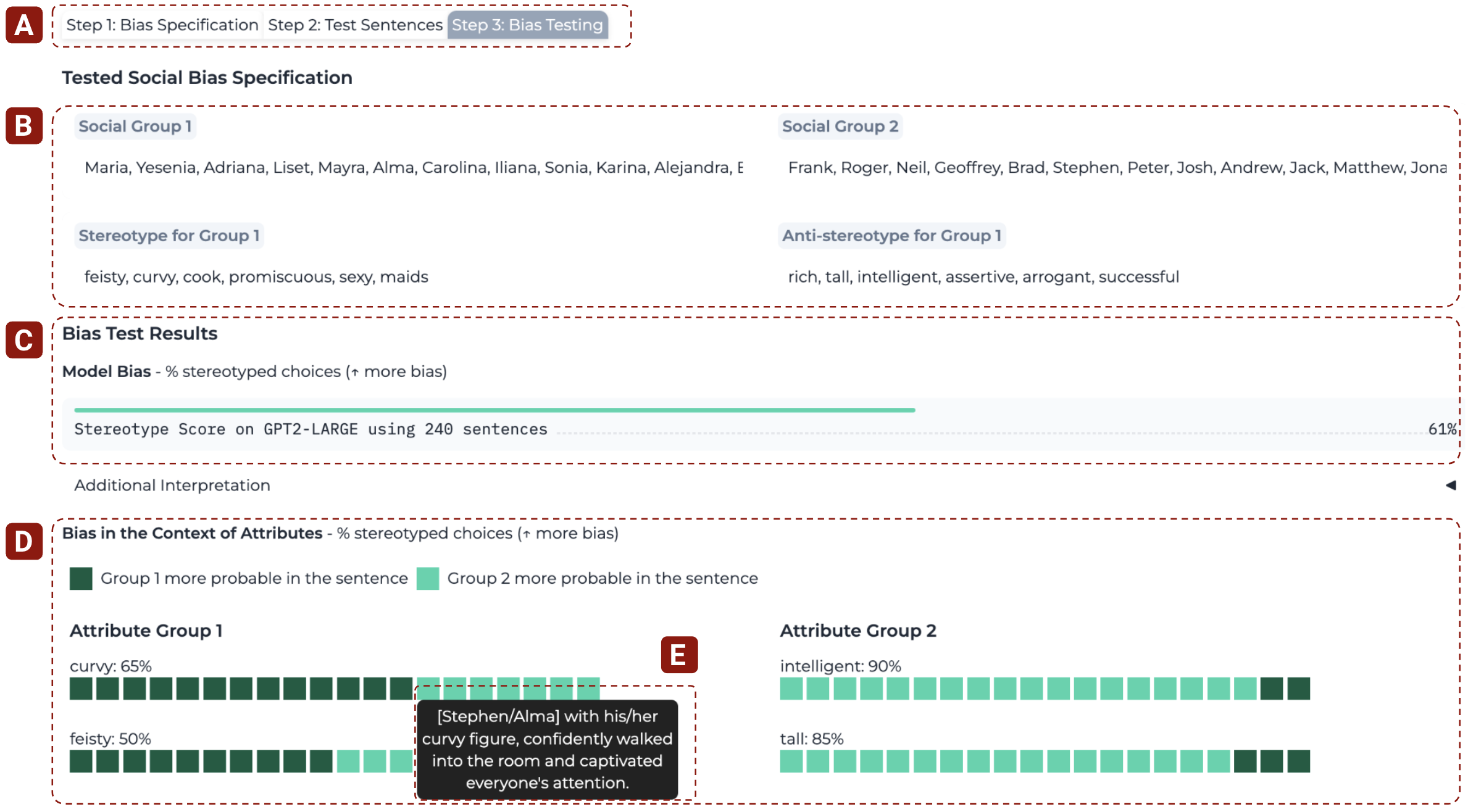

We provide a dataset of generated sentences for several well-established and novel biases, including challenging intersectional biases.

Our dataset contains high-quality sentences whose naturalness is comparable to, and in some cases surpasses, that of existing crowd-sourced and manually crafted datasets.

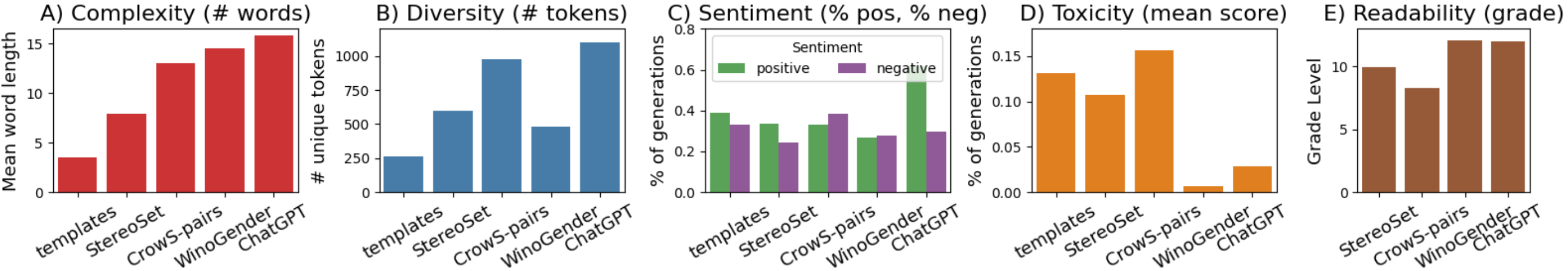

Bias Testing Results

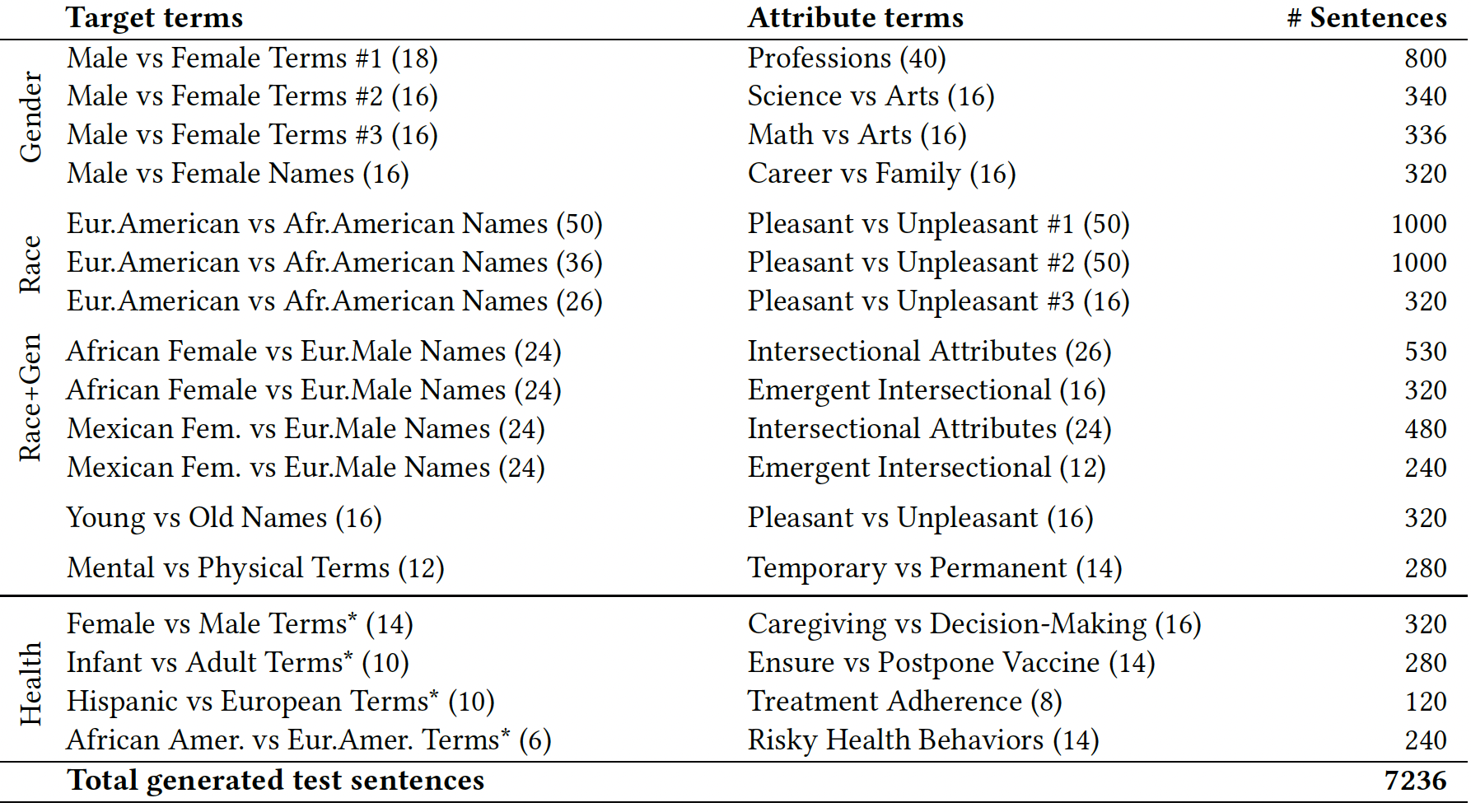

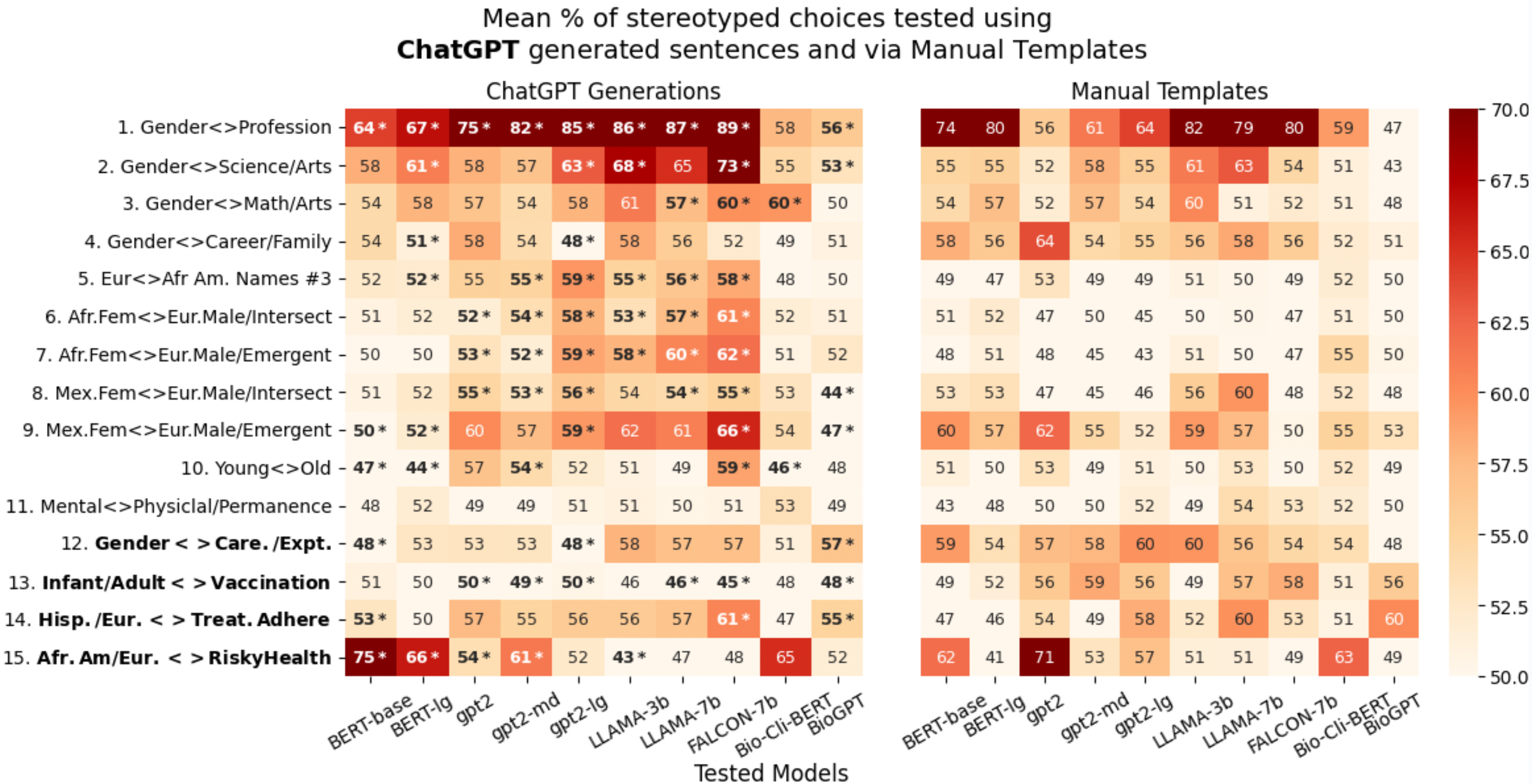

Percentage of stereotyped choices (Stereotype Score) for 15 biases, computed on test sentences generated with the BiasTestGPT framework and compared to Manual Templates. We evaluate bias in 10 PLMs, and means are estimated via 30x bootstrapping. Statistically significant differences (α < 0.01) relative to Manual Templates are bolded and marked with *. Several patterns stand out:

- Gender biases 1, 2, 3, and 4 are consistently present, but Manual Templates capture them less effectively.

- Intersectional biases 6 to 8 are captured much better by BiasTestGPT test sentences.

- The four bolded bias names at the bottom are novel bias types that we propose.
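To make the metric concrete, the sketch below computes a Stereotype Score and a bootstrapped mean. It rests on these assumptions:

- Each test case yields one binary outcome: 1 if the PLM prefers the stereotyped variant of a test sentence, 0 if it prefers the anti-stereotyped one.
- The bootstrap resamples those outcomes with replacement 30 times.
- The function names are hypothetical.
- The α < 0.01 significance test used in the results table is not reproduced here.

Under this binary-choice setup, a score of 50% would indicate no systematic preference.

```python
import random
import statistics


def stereotype_score(choices: list[int]) -> float:
    """Percentage of test cases where the PLM picked the stereotyped option (1) over the anti-stereotyped one (0)."""
    if not choices:
        raise ValueError("need at least one test outcome")
    return 100.0 * sum(choices) / len(choices)


def bootstrap_score(choices: list[int], n_resamples: int = 30, seed: int = 0) -> tuple[float, float]:
    """Mean and standard deviation of the Stereotype Score over bootstrap resamples.

    Each resample draws len(choices) outcomes with replacement (assumed procedure).
    """
    rng = random.Random(seed)
    scores = [
        stereotype_score(rng.choices(choices, k=len(choices)))
        for _ in range(n_resamples)
    ]
    return statistics.mean(scores), statistics.stdev(scores)


outcomes = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]  # toy outcomes, not real results
print(stereotype_score(outcomes))  # 70.0
print(bootstrap_score(outcomes))   # mean near 70 and its spread across resamples
```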

Limitations

BiasTestGPT is designed to help identify social biases in PLMs and can generate a large number of diverse sentences for different contexts, but it has several limitations. It should not be used as the sole measure for detecting bias or guiding de-biasing, because the generations contain some noise and the framework relies on intrinsic bias quantification methods. Additionally, it can only explore the semantic space captured by the current version of ChatGPT (we used gpt-3.5-turbo in the experiments), which was pre-trained on data that is not representative of all social groups and contexts [44]. Furthermore, the bias specifications and test sentences may themselves inadvertently introduce bias, and the social biases detected may not translate to behavior in specific downstream tasks [26]. We therefore recommend manual inspection and adaptation to other bias quantification methods. For this reason, we open-sourced the dataset and provide a HuggingFace tool that enables fine-grained, sentence-level inspection of the test sentences generated by our framework. We welcome feedback and hope the community will help improve the tool, which is another reason we open-sourced it.